Hello Magento Friends,

Configuring the robots.txt file is an important part of SEO for any website. The robots.txt file contains a set of instructions that tells web crawlers which pages of the website they are allowed to crawl and which they should skip.

The robots.txt file governs how web crawlers interact with your website, so configuring it properly is essential for every Magento 2 store.

Steps to Configure Robots.txt in Magento 2:

Step 1: In the Admin Panel, go to Content > Design > Configuration and click Edit for your desired store scope.

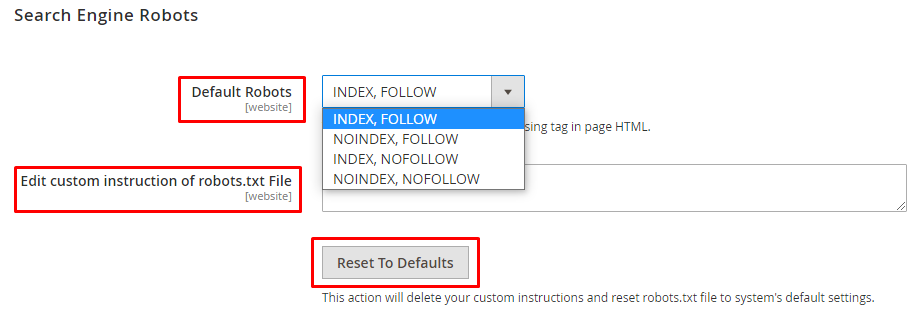

Step 2: Expand the Search Engine Robots section.

- Choose an option for the Default Robots field. Magento adds the selected value to the robots meta tag in the HTML head of your store pages, so this setting works alongside the robots.txt file rather than inside it. The available options are:

  - INDEX, FOLLOW – Web crawlers index the page and follow the links on it.

  - NOINDEX, FOLLOW – Web crawlers do not index the page but follow the links on it.

  - INDEX, NOFOLLOW – Web crawlers index the page but do not follow the links on it.

  - NOINDEX, NOFOLLOW – Web crawlers neither index the page nor follow the links on it.

- In the Edit Custom Instruction of robots.txt File field, enter any custom instructions for web crawlers.

- The Reset to Defaults button erases your custom robots.txt instructions and restores the default settings.
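
To make the four combinations concrete, here is a minimal Python sketch of how a crawler might interpret one of these values. The helper `interpret_robots_meta` is hypothetical, and real crawlers also recognize other directives (such as `none` or `noarchive`) that this sketch ignores.

```python
from __future__ import annotations


def interpret_robots_meta(content: str) -> dict[str, bool]:
    """Interpret a robots value such as "NOINDEX, FOLLOW".

    Hypothetical helper: only the INDEX/NOINDEX and FOLLOW/NOFOLLOW tokens
    are considered. Indexing and link-following are allowed unless they
    are explicitly switched off.
    """
    # Split on commas, ignore surrounding spaces, compare case-insensitively.
    tokens = {token.strip().upper() for token in content.split(",") if token.strip()}
    return {
        "index": "NOINDEX" not in tokens,
        "follow": "NOFOLLOW" not in tokens,
    }


for option in ("INDEX, FOLLOW", "NOINDEX, FOLLOW", "INDEX, NOFOLLOW", "NOINDEX, NOFOLLOW"):
    print(f"{option:<18} -> {interpret_robots_meta(option)}")
```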

Click Save Configuration and flush the cache so that the changes take effect.


Custom Instructions for Magento 2 Robots.txt:

Allows Full Access

    User-agent: *
    Disallow:

Disallows Access to All Folders

    User-agent: *
    Disallow: /
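
The only difference between these two snippets is the slash after `Disallow:`. An empty value blocks nothing, while `/` matches every path on the site. The Python sketch below checks both with the standard library's `urllib.robotparser`; the helper `can_crawl` and the URL `https://domain.com/women/tops.html` are made-up examples.

```python
from urllib.robotparser import RobotFileParser

ALLOW_ALL = "User-agent: *\nDisallow:\n"
BLOCK_ALL = "User-agent: *\nDisallow: /\n"


def can_crawl(robots_txt: str, url: str, agent: str = "*") -> bool:
    """Return True if `agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse the text directly, no HTTP request
    return parser.can_fetch(agent, url)


url = "https://domain.com/women/tops.html"  # hypothetical store URL
print("Allow full access:", can_crawl(ALLOW_ALL, url))
print("Disallow everything:", can_crawl(BLOCK_ALL, url))
```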

Default Instructions

    Disallow: /lib/
    Disallow: /*.php$
    Disallow: /pkginfo/
    Disallow: /report/
    Disallow: /var/
    Disallow: /catalog/
    Disallow: /customer/
    Disallow: /sendfriend/
    Disallow: /review/
    Disallow: /*SID=
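
Some of these rules use wildcards: `*` matches any run of characters, and a trailing `$` anchors the rule to the end of the URL, so `/*.php$` blocks `/index.php` but not `/index.php?id=1`. Python's `urllib.robotparser` only does plain prefix matching, so the sketch below hand-rolls the matching rules described in RFC 9309 (the longest matching rule wins, and `Allow` wins a tie). The function names are hypothetical, and the sketch ignores `User-agent` grouping.

```python
from __future__ import annotations

import re

DEFAULT_INSTRUCTIONS = """\
User-agent: *
Disallow: /lib/
Disallow: /*.php$
Disallow: /pkginfo/
Disallow: /report/
Disallow: /var/
Disallow: /catalog/
Disallow: /customer/
Disallow: /sendfriend/
Disallow: /review/
Disallow: /*SID=
"""


def parse_rules(robots_txt: str) -> list[tuple[str, str]]:
    """Collect (directive, pattern) pairs from Allow/Disallow lines; comments are dropped."""
    rules = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() in ("allow", "disallow"):
            rules.append((field.strip().lower(), value.strip()))
    return rules


def rule_matches(pattern: str, path: str) -> bool:
    """'*' matches any characters; a trailing '$' requires the path to end there."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = re.compile(".*".join(re.escape(piece) for piece in body.split("*")))
    return bool(regex.fullmatch(path) if anchored else regex.match(path))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Apply the longest matching rule; a path matched by no rule is allowed."""
    best_length, allowed = -1, True
    for directive, pattern in rules:
        if not pattern or not rule_matches(pattern, path):
            continue  # an empty Disallow value blocks nothing
        longer = len(pattern) > best_length
        tie_goes_to_allow = len(pattern) == best_length and directive == "allow"
        if longer or tie_goes_to_allow:
            best_length, allowed = len(pattern), directive == "allow"
    return allowed


rules = parse_rules(DEFAULT_INSTRUCTIONS)
for path in ("/index.php", "/index.php?id=1", "/checkout/cart/?SID=abc123", "/women/tops.html"):
    print(path, "allowed" if is_allowed(rules, path) else "blocked")
```

The regular-expression translation keeps the sketch short; a production crawler would more likely use a dedicated parser.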

Restrict Checkout and Customer Account

    Disallow: /checkout/
    Disallow: /onestepcheckout/
    Disallow: /customer/
    Disallow: /customer/account/
    Disallow: /customer/account/login/
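
Because robots.txt rules match by prefix, `Disallow: /customer/` already covers `/customer/account/` and `/customer/account/login/`; the extra lines are harmless but redundant. A small Python sketch (the helper `find_redundant` is hypothetical) can spot such rules, assuming a single group of plain `Disallow` paths with no wildcards and no `Allow` exceptions.

```python
from __future__ import annotations


def is_plain(path: str) -> bool:
    """True for rules without the '*' or trailing '$' wildcards."""
    return "*" not in path and not path.endswith("$")


def find_redundant(disallowed: list[str]) -> list[str]:
    """Return Disallow paths already covered by a different, shorter prefix rule.

    Assumes one User-agent group with no Allow rules.
    """
    redundant = []
    for path in disallowed:
        if not is_plain(path):
            continue  # wildcard rules are not prefix-comparable
        # An empty Disallow value blocks nothing, so it never covers another rule.
        if any(other != path and other and is_plain(other) and path.startswith(other)
               for other in disallowed):
            redundant.append(path)
    return redundant


checkout_rules = [
    "/checkout/",
    "/onestepcheckout/",
    "/customer/",
    "/customer/account/",
    "/customer/account/login/",
]
print(find_redundant(checkout_rules))
```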

Restrict Catalog Search Pages

    Disallow: /catalogsearch/
    Disallow: /catalog/product_compare/
    Disallow: /catalog/category/view/

Restrict Common Files

    Disallow: /composer.json
    Disallow: /composer.lock
    Disallow: /package.json

Restrict Common Folders

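    Disallow: /app/
    Disallow: /bin/
    Disallow: /dev/
    Disallow: /lib/
    Disallow: /pub/
    Disallow: /phpserver/

Be careful with Disallow: /pub/. If your web server serves Magento from its root directory, /pub/static/ and /pub/media/ contain the CSS, JavaScript, and images that crawlers need to render your pages.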

Restrict Technical Magento Files

    Disallow: /cron.php
    Disallow: /cron.sh
    Disallow: /error_log
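
Typos in these paths fail silently: with a stray space after the slash, as in `Disallow: / cron.php`, the value is no longer the intended path `/cron.php`, and crawlers may not apply it as you expect. A minimal Python lint sketch (the `lint_robots` name is hypothetical) flags Allow/Disallow values that contain whitespace or do not start with `/` or `*`, plus exact duplicates. It checks the whole file at once and ignores `User-agent` grouping.

```python
def lint_robots(robots_txt: str) -> list:
    """Report suspicious Allow/Disallow values and exact duplicate rules."""
    problems = []
    first_seen = {}  # (directive, value) -> line number of first occurrence
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments
        field, sep, value = line.partition(":")
        field, value = field.strip().lower(), value.strip()
        if not sep or field not in ("allow", "disallow") or not value:
            continue  # not a path rule, or an empty Disallow (which blocks nothing)
        if any(ch.isspace() for ch in value):
            problems.append(f"line {number}: whitespace inside path {value!r}")
        elif not value.startswith(("/", "*")):
            problems.append(f"line {number}: path {value!r} should start with '/' or '*'")
        key = (field, value)
        if key in first_seen:
            problems.append(f"line {number}: duplicate of line {first_seen[key]}")
        else:
            first_seen[key] = number
    return problems


sample = """\
User-agent: *
Disallow: / cron.php
Disallow: /cron.sh
Disallow: error_log
Disallow: /cron.sh
"""
for problem in lint_robots(sample):
    print(problem)
```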

Disallow Duplicate Content

    Disallow: /tag/
    Disallow: /review/

Magento 2 Robots.txt Example:

Create a robots.txt file in the Magento root directory and add the code below.

NOTE: If your site runs from the pub directory (that is, pub/ is your web server's document root), create robots.txt in the pub directory instead.

    # Default Instructions
    User-agent: *
    Disallow: /index.php/
    Disallow: /*?
    Disallow: /checkout/
    Disallow: /app/
    Disallow: /lib/
    Disallow: /*.php$
    Disallow: /pkginfo/
    Disallow: /report/
    Disallow: /var/
    Disallow: /catalog/
    Disallow: /customer/
    Disallow: /sendfriend/
    Disallow: /review/
    Disallow: /*SID=
    Disallow: /enable-cookies/
    Disallow: /LICENSE.txt
    Disallow: /LICENSE.html
    # Disable checkout & customer account
    Disallow: /checkout/
    Disallow: /onestepcheckout/
    Disallow: /customer/
    Disallow: /customer/account/
    Disallow: /customer/account/login/
    # Restrict Catalog Search Pages
    Disallow: /catalogsearch/
    Disallow: /catalog/product_compare/
    Disallow: /catalog/category/view/
    Disallow: /catalog/product/view/
    # Restrict Common Folders
    Disallow: /app/
    Disallow: /bin/
    Disallow: /dev/
    Disallow: /lib/
    Disallow: /phpserver/
    # Disallow Duplicate Content
    Disallow: /tag/
    Disallow: /review/
    # Sitemap
    Sitemap: https://domain.com/sitemap.xml
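
Once the file is in place, you can sanity-check it with Python's standard `urllib.robotparser`, which also collects `Sitemap:` lines (`site_maps()` needs Python 3.8 or later). Keep in mind that this parser only does prefix matching and does not support the `*` and `$` wildcards, so this sketch uses an excerpt of the example without the wildcard rules; `domain.com` is the placeholder domain from the example, and the product URL is made up.

```python
from urllib.robotparser import RobotFileParser

# Excerpt of the Magento 2 example. Wildcard rules such as /*? are omitted
# because urllib.robotparser does not support wildcards.
ROBOTS_TXT = """\
# Default Instructions
User-agent: *
Disallow: /index.php/
Disallow: /checkout/
Disallow: /customer/
# Restrict Catalog Search Pages
Disallow: /catalogsearch/
Disallow: /catalog/product_compare/
# Sitemap
Sitemap: https://domain.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "https://domain.com/checkout/cart/",
    "https://domain.com/catalogsearch/result/?q=shirt",
    "https://domain.com/women/tops.html",  # hypothetical product page
):
    print(url, "allowed" if parser.can_fetch("*", url) else "blocked")

print("Sitemaps:", parser.site_maps())
```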

Conclusion:

That's how you can configure robots.txt in Magento 2. If you need any help, mention it in the comments section or reach out to our support team. Do share the article with your colleagues and stay updated with us!

Happy Reading